This article refers to a previous version of Arctic. The improvements we refer to below in “What Next?” have become an new incarnation of Arctic, now called ArcticDB.

ArcticDB’s core has been open sourced, please check it out!

Introduction

Man Group has been at the forefront of data science, long before it was a commonly used phrase. We know the inherent value that is to be found in building a world-leading technology platform, enabling us to ingest, analyse and ultimately extract alpha from datasets of varying shapes and sizes.

Early on when developing our platform, we made an active choice to fully commit to the Python data science and analytics ecosystem. This meant that our data storage solution needed to be both highly performant and feature rich; capable of storing both large scale batch data as well as high volume streaming tick data, whilst seamlessly integrating with Python.

With this in mind, we built Arctic, our Python-centric data science database. Open-sourced in 2015, Arctic integrates with Python as a first-class feature, is intuitive for both researchers and engineers to use, and fits in seamlessly with the rest of our Python stack. Today, Arctic is the database engine of choice for all front-office analytics at Man Group.

In this article, we would like to demonstrate how you can start using Arctic for highly intuitive data storage and retrieval.

Use Cases

Before kicking off with the tutorial, we'd like to be clear regarding some of the use cases that we feel best suit Arctic.

Arctic is a great choice for the following use cases:

- Bulk timeseries dataframe data: Arctic is primarily designed to store data that is timeseries indexed, a good example of which is demonstrated in the GDP example below. Furthermore, Arctic has been optimised for bulk data transfers. We find dataframes with a datetime index a good abstraction for the sort of data, and data sizes, that Arctic is designed for;

- Tick data: The Arctic Tick Store is designed to store high volume tick data such as exchange price feeds. Please note that whilst this article does not delve into the Tick Store in much detail, more details can be found in the Arctic documentation;

- Mixed timeseries & Python storage: Arctic also allows the storage of dictionary-nested timeseries data and can automatically pickle and un-pickle common Python data types. This means Arctic can function as a store for not only timeseries data, but also commonly used Python datastructures.

Arctic is not a great choice for the following use cases:

- Transactional, relational data: If you require support for database transactions or are storing relational data, Arctic is probably not the optimal choice for your data.

Initial Setup

Arctic has two prerequisites:

- Python: Arctic requires a functional Python environment. We won't delve into how to setup up a Python environment as there are plentiful resources on how to do this on the wider web;

- Mongo: Arctic also requires a functional Mongo cluster. For the purposes of this guide, we'll use Docker to create a simple single-node MongoDB instance.

To begin creating the required MongoDB instance, we'll first pull the latest Mongo docker image:

We can now start the container:

And that's it for Mongo! We'll come back to using the cluster in a moment, but for now let's go ahead and install Arctic:

And that's it! We now have a functional single-node Mongo cluster and Arctic installed in our local Python environment. Why don't we take it for a spin and see what we can do?

Basic Usage

Arctic provides a wrapper for handling connections to Mongo. To start with, we need to open a Python shell and instantiate our Arctic class against our Mongo cluster:

Now we have an Arctic instance, we can use it to manage the available libraries and symbols. Symbols are the atomic unit of data storage within Arctic and are predominantly used to store DataFrames containing timeseries data. Libraries, on the other hand, contain like symbols and are accessible via an Arctic instance. Note that both libraries and symbols are referenced via string identifiers.

With these required definitions out the way, let's have a look at what libraries already exist:

Unsurprisingly, there are no pre-existing libraries stored in our brand new Mongo cluster, so let's create one:

You may see a message that the library has been created, but `couldn't enable sharding`. If you do, don't worry! It just means we'll be storing the data unsharded, which is not an issue for small datasets.

Once a library is initialized, you can access it:

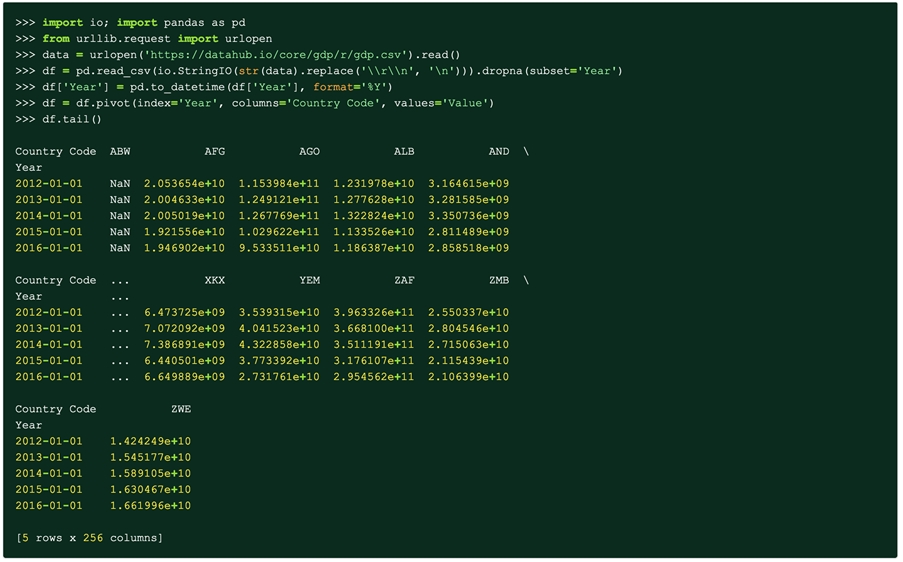

We now have access to the library and can manage the contained symbols. Let's go ahead and create a symbol by writing a DataFrame containing timeseries data to it! To do this, we're going to fetch a small example of some realistic, real world data - the Open Data GDP dataset:

We now have a simple DataFrame containing a datetime index. Each row represents a year and each column contains an individual timeseries of the nations' GDP over time. This structure is typical for data written to Arctic.

Arctic adheres to a philosophy known as Pandas-In, Pandas-Out, which means that all primitives either take or return Pandas DataFrames. This philosophy ensures that the API is immediately intuitive to both researchers and engineers alike.

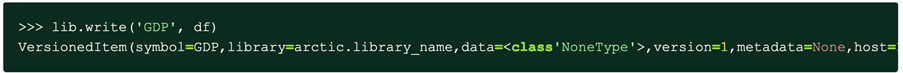

With that in mind, let's write out our DataFrame to Arctic:

The write primitive takes an input dataframe and writes it out as an indexed, structured timeseries.

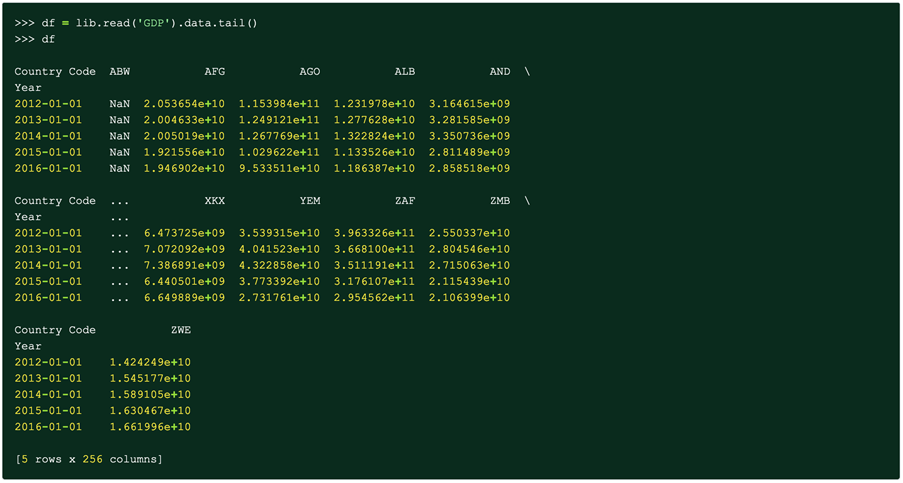

To read the data back, we can use the read primitive:

This is a limited and simplistic example of a read operation. Arctic also supports retrieving data by data range and by column subset. Furthermore, as Arctic also efficiently versions data, read allows you to pass in a point in time to rewind your data back to the specified point in time. For more information, please see the complete docs.

And there we have it! We're written our first timeseries DataFrame to Arctic, and pulled it back out!

What Next?

We've seen how easy it is to install and use Arctic. With just one package install and a few lines of Python, we've stored and retrieved a simple datetime indexed Pandas DataFrame. In addition to the functionality covered in the introduction, Arctic also offers a host of more advanced features including:

- Support for streaming tick data;

- Versioning, allowing modifications to be tracked through time and reverted;

- Bulk data transfers of dataframes containing hundreds of thousands of rows.

The Arctic story doesn't end there though. At Man Group, we're continuing to invest heavily in our data platform, and that includes Arctic. Following the success of Arctic both internally within Man Group and externally, we've been in the process of a ground-up rewrite designed to ensure that Arctic is ready for the next generation of data challenges that Man Group faces.

The next generation of Arctic offers the same, intuitive Python-centric API whilst also utilising a custom C++ storage engine and storage format, resulting in a product that offers:

- Fast setup: The next iteration of Arctic only requires an available S3 instance and a Python client. MongoDB is not required;

- Fast performance: World leading performance -10x-100x faster than the current Arctic version;

- Powerful: Advanced Pandas-like analytics that intelligently utilises the Arctic storage format;

- Enterprise ready: Enterprise functionality including ACL's, replication and incremental backups.

For more information on what the next generation of Arctic will offer, please contact us at arcticdb@man.com.

You are now leaving Man Group’s website

You are leaving Man Group’s website and entering a third-party website that is not controlled, maintained, or monitored by Man Group. Man Group is not responsible for the content or availability of the third-party website. By leaving Man Group’s website, you will be subject to the third-party website’s terms, policies and/or notices, including those related to privacy and security, as applicable.