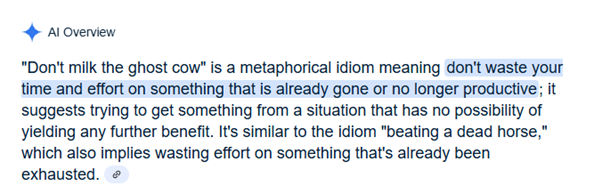

If you’re wondering when the ‘ghost cow’ meme passed you by, you’re not alone. We’ve all seen artificial intelligence (AI) agents, in a valiant attempt to bring order to chaos when connecting disparate information, confidently generate responses that sound plausible but are nonsensical.

But how did we get here? We’re living through an information avalanche. The world exchanges more data than ever: more than 11,800 data centres operate around the globe, with a combined capacity of 122.2 gigawatts in 2024.

All this knowledge creates a paradox. In the investment world, a brief survey of fund managers, asset allocators and investment professionals reveals that with more information, it’s increasingly difficult to separate insight from ‘noise’ and to generate alpha.

Machines can help. Large language models (LLMs) are an important milestone on the path towards artificial ‘general’ intelligence. Trained on massive datasets containing terabytes of text to predict the next token in a sequence, these versatile models, which have billions of parameters, can perform a wide range of general tasks. Through ‘agentic AI’, systems built from AI agents that can make decisions and leverage our proprietary research and development infrastructure, LLMs promise the capacity not only to sort data but also to produce trade recommendations. Man Group’s own model, Alpha GPT, is designed to potentially deliver 10 times as many actionable trade recommendations as an analyst team, in a fraction of the time.

So far, so good. But can we fully trust our models? As the example above shows, LLMs are prone to ‘hallucinate’, or ‘fill in the blanks’. Unlike humans, they don't instinctively know the difference between an educated guess and a reliable fact.

So, how can we solve for hallucination?

Addressing this issue starts with better, more comprehensive training data. As models mature, we may see more accurate datasets, with contradictory or false information increasingly removed from pre-training data.

Part of this improvement may come from reinforcement learning from human feedback (RLHF), which fine-tunes models based on human preferences for truthful, accurate responses.

A related approach, constitutional AI, trains models against an explicit set of principles reflecting human values, such as honesty or fairness. It allows us to train models to be more candid about uncertainty and to avoid making up information when they don't know something.

Meanwhile, retrieval-augmented generation (RAG) grounds responses in facts by connecting LLMs to reliable databases, allowing them to cite specific sources rather than generating from memory alone. It can also deliver real-time information: search engines or APIs can supply current data, so the model need not rely on what it learned before its training data cutoff.
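To make the idea concrete, here is a minimal sketch of the retrieval step, assuming a toy in-memory document store and simple bag-of-words cosine similarity in place of the embedding model and vector database a production system would typically use. The document contents and the names `DOCUMENTS`, `retrieve` and `build_grounded_prompt` are hypothetical, and no model is actually called: the sketch only shows how retrieved, citable sources are placed in front of the question so that the model answers from them rather than from memory.

```python
import math
import re
from collections import Counter

# Hypothetical toy "reliable database": document id -> text.
DOCUMENTS = {
    "doc-1": "Retrieval-augmented generation connects a language model to an external knowledge source.",
    "doc-2": "A training data cutoff means a model has not seen events after a certain date.",
    "doc-3": "Calibration measures whether stated confidence matches observed accuracy.",
}

def tokenize(text: str) -> list[str]:
    """Lower-case the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, k: int = 2) -> list[tuple[str, str]]:
    """Return the k documents most similar to the query as (doc_id, text) pairs."""
    q = Counter(tokenize(query))
    ranked = sorted(
        DOCUMENTS.items(),
        key=lambda item: cosine(q, Counter(tokenize(item[1]))),
        reverse=True,
    )
    return ranked[:k]

def build_grounded_prompt(query: str, k: int = 2) -> str:
    """Assemble a prompt that restricts the model to retrieved, citable sources."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in retrieve(query, k))
    return (
        "Answer using only the sources below and cite them by id. "
        "If the sources do not contain the answer, say \"I don't know\".\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )

print(build_grounded_prompt("What is a training data cutoff?"))
```

Replacing the static store with fresher sources, such as results from a search API, is what would let a system like this answer beyond its training data cutoff.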

We may also see better systems for assessing the reliability of generated content. Beyond traditional fact-checking, human-in-the-loop approaches use people to review outputs in high-stakes applications.

Within the models themselves, research into ‘uncertainty quantification’ aims to give models better ways to estimate their own confidence, while ensemble methods run multiple models and compare their outputs to identify potential hallucinations. We can also expect better calibration: models whose stated confidence more closely matches how often they are actually right.
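Both checks can be sketched in a few lines. The first function assumes we already have one answer per model (how those answers are obtained is left out) and measures what share of the models agree with the majority answer; low agreement is a warning sign. The second computes expected calibration error (ECE), a standard measure of the gap between stated confidence and observed accuracy. The function names and the ten-bin default are illustrative choices, not a description of any particular production system.

```python
from collections import Counter

def ensemble_agreement(answers: list[str]) -> tuple[str, float]:
    """Majority answer across models and the fraction of models that gave it."""
    if not answers:
        raise ValueError("need at least one answer")
    normalised = [a.strip().lower() for a in answers]
    answer, votes = Counter(normalised).most_common(1)[0]
    return answer, votes / len(normalised)

def expected_calibration_error(
    confidences: list[float], correct: list[bool], n_bins: int = 10
) -> float:
    """Weighted average of |mean confidence - accuracy| over equal-width bins."""
    n = len(confidences)
    ece = 0.0
    for b in range(n_bins):
        lo, hi = b / n_bins, (b + 1) / n_bins
        # Bins are [lo, hi); a confidence of exactly 1.0 goes in the last bin.
        idx = [
            i for i, c in enumerate(confidences)
            if lo <= c < hi or (b == n_bins - 1 and c == hi)
        ]
        if not idx:
            continue
        avg_conf = sum(confidences[i] for i in idx) / len(idx)
        accuracy = sum(correct[i] for i in idx) / len(idx)
        ece += len(idx) / n * abs(avg_conf - accuracy)
    return ece
```

With answers `["Paris", " paris", "Lyon"]`, two of three models agree, giving a score of about 0.67. A model that reports 90% confidence on ten questions but is right on only five has an ECE of roughly 0.4, while one that is right on nine of them has an ECE close to zero.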

Crucially, model and system-prompt design may increasingly encourage more human-like expressions of uncertainty, such as "I don't know" or "I'm not certain", where appropriate.
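One simple way to act on a confidence estimate is to let the system decline to answer when confidence is low. The sketch below assumes a confidence score between 0 and 1 is already available (for example from ensemble agreement or a calibrated model); the `SYSTEM_PROMPT` wording, the 0.75 threshold and the hedged middle tier are hypothetical choices for illustration.

```python
# Hypothetical system-prompt wording that invites the model to admit uncertainty.
SYSTEM_PROMPT = (
    "If you are not confident an answer is supported by evidence, "
    "say \"I don't know\" instead of guessing."
)

def answer_or_abstain(answer: str, confidence: float, threshold: float = 0.75) -> str:
    """Return the answer, a hedged answer, or an admission of uncertainty."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= threshold:
        return answer
    # Moderately confident: still answer, but flag the uncertainty explicitly.
    if confidence >= threshold / 2:
        return f"I'm not certain, but possibly: {answer}"
    return "I don't know."

print(answer_or_abstain("Paris", 0.9))  # Paris
print(answer_or_abstain("Paris", 0.5))  # I'm not certain, but possibly: Paris
print(answer_or_abstain("Paris", 0.1))  # I don't know.
```

Where to set the threshold is a trade-off: a higher value means fewer confident-but-wrong answers, at the cost of more refusals.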

LLMs are already improving rapidly, and the future heralds much more accurate responses which combine human intuition with machine-like efficiency.

In the meantime, it may be best to use ‘chain-of-thought prompting’: asking models to show their reasoning, which makes potential errors easier to spot. And by breaking queries down into smaller, more verifiable steps, we can hope for more accurate and reliable responses.
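Shown reasoning is easiest to audit when each step can be checked on its own. As a minimal sketch, assume the model has been prompted to write every intermediate calculation on its own line in the form `a op b = c`; the hypothetical `check_steps` function below recomputes each such step exactly and flags any that do not hold. Real reasoning chains are rarely this tidy, so this only illustrates why smaller, verifiable steps help.

```python
import re
from fractions import Fraction

# One arithmetic claim per line, e.g. "120 * 0.05 = 6".
NUMBER = r"-?\d+(?:\.\d+)?"
STEP = re.compile(rf"^\s*({NUMBER})\s*([-+*/])\s*({NUMBER})\s*=\s*({NUMBER})\s*$")

OPS = {
    "+": lambda a, b: a + b,
    "-": lambda a, b: a - b,
    "*": lambda a, b: a * b,
    "/": lambda a, b: a / b,
}

def check_steps(reasoning: str) -> list[tuple[int, str, bool]]:
    """Return (line number, step, is_correct) for each line that parses as a step.

    Lines that are not arithmetic steps (plain commentary) are skipped.
    """
    results = []
    for line_no, line in enumerate(reasoning.splitlines(), start=1):
        match = STEP.match(line)
        if match is None:
            continue
        a, op, b, claimed = match.groups()
        left, right = Fraction(a), Fraction(b)
        if op == "/" and right == 0:
            ok = False  # division by zero can never be a valid step
        else:
            # Fraction arithmetic is exact, so "0.1 + 0.2 = 0.3" is judged correct.
            ok = OPS[op](left, right) == Fraction(claimed)
        results.append((line_no, line.strip(), ok))
    return results

reasoning = """Start from a base of 120.
120 * 0.05 = 6
6 + 4 = 11
So the answer is 11."""
for line_no, step, ok in check_steps(reasoning):
    print(line_no, step, "OK" if ok else "CHECK THIS STEP")
```

Flagging line 3 points a reviewer straight at the faulty addition, rather than leaving them to second-guess the final figure.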

This article was inspired by a talk by Ziang Fang at the Man Alternative Investing Symposium.

Image sourced from: https://www.tomsguide.com/ai/google-is-hallucinating-idioms-these-are-the-five-most-hilarious-we-found

Please refer to the Glossary for investment terms.

All investments are subject to market risk, including the possible loss of principal. It is not possible to invest directly in an index. Alternative investments can involve significant additional risks. Past performance is no guarantee of future results.
